Why this reality?

Why Do We Observe This Reality and Not Another?

So you might wonder, since every possible reality exists, why do we experience this particular one? Why does it seem to be stable (at least for about 13.8 billion years) and consistent? Why aren’t we observing a random mess of nonsense?

It’s mind-boggling to try to grasp the scope of infinite realities and what it even means for some to be more probable than others. Loosely, we understand that there are infinitely many algorithms, plus infinitely many combinations of starting conditions (data) within each of them. The algorithms can resemble computer code, state machines, cellular automata, or any other set of rules you can imagine. Really, the one thing you can be sure of about this reality is that it contains you. But what other realities might contain a consciousness just like yours? Is it just luck that you observe this reality and not another?

Variations in Starting Conditions Appearing as Probabilistic Chance

I wanted to get an idea of how the infinite variations among realities should appear to sentient beings within those realities. Here’s a fairly simple, if contrived, thought experiment to help investigate how that might work.

Suppose we write another simulation containing a simulated being. We have an array of N bits, each of which we can fill with either 1 or 0. A counter increments the array index every second and, based on the value at that index, changes the simulated sky to either black (0) or white (1). We decide to initialize all values to 1.
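A minimal sketch of the sky program just described, assuming N = 16 (the size used in the graph later on) and that the being lives for exactly N seconds; the names `sky_bits` and `sky_color` are hypothetical.

```python
N = 16  # number of seconds of sky color stored in the array (illustrative size)

# The array we "program": every entry initialized to 1 (white)
sky_bits = [1] * N

def sky_color(second: int) -> str:
    """Color the simulated being sees at a given second.

    A counter advances the array index once per second; 0 -> black, 1 -> white.
    """
    return "white" if sky_bits[second] else "black"

observed = [sky_color(t) for t in range(N)]
print(observed.count("white") / N)  # fraction of seconds with a white sky
```

In our own simulation this always prints 1.0; every other fill of `sky_bits` is one of the alternative realities discussed next.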

To the simulated being, at any given second, what is the probability that the sky is white?

Going by my previous blog entry, we have to assume that every possible version of this simulation is an existing reality, whether simulated or not. Even if in our own reality we initialize the sky to always be white, there are at least 2^N existing realities, one for every possible combination of the array contents. That’s in addition to all the infinite variations of the rest of the world, but for simplicity, let’s just consider the sky color.

What We Programmed

What the Being Observes?

The graph below shows the distribution for N = 16. There are 65,536 possible realities in this scenario. In one of those realities, the simulated being always sees a black sky. In two of them (the already existing reality in the omniverse and the one simulated within our own reality), the being always observes a white sky. The distribution is a bell curve (strictly, a binomial distribution), with the greatest number of realities seeing a white sky 50% of the time, and other variations becoming progressively less probable as the percentage increases or decreases. Even though each reality’s sky colors were determined from the very start, from the perspective of the beings, it appears to obey probabilistic chance. And for most of them, it’s not 50%. It is almost as if, no matter how many times you flipped a coin, it only came up heads, say, 37.5% of the time. Can you imagine the confusion of beings living in such a world, trying to explain such a fundamental element of their universe?

Probability of White Sky With Size N = 16 Array
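The counts behind the graph can be reproduced with a few lines, assuming each of the 2^N array fillings counts as exactly one reality. The number of arrays with exactly k ones is the binomial coefficient C(N, k), which is where the bell shape comes from.

```python
from math import comb

N = 16
total = 2 ** N  # 65,536 possible array contents, one reality each

# comb(N, k) = number of arrays with exactly k ones, i.e. realities whose
# sky is white for exactly k of the N seconds
counts = [comb(N, k) for k in range(N + 1)]

for k, c in enumerate(counts):
    print(f"white {k / N:6.1%} of the time: {c:6d} realities ({c / total:.2%})")

# Share of realities that do NOT see a white sky exactly half the time
not_half = 1 - counts[N // 2] / total
print(f"{not_half:.1%}")
```

The peak at 50% holds 12,870 realities, under a fifth of the total, so roughly 80% of the beings see some other ratio; the 37.5% case (6 white seconds out of 16) alone covers 8,008 realities.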

Here’s another interesting thing to think about: what if we encode an ASCII message in the sky color and use it to establish a dialogue with the simulated entities? Effectively, this gives us N bits of text (N/8 characters at 8 bits each) to “chat” with. With a large enough N, we could have a long, meandering conversation covering many topics. Interestingly, even if we never simulated this, there would still be a 1/2^N chance that the simulated beings would have this chat, fully believing there to be intelligent entities at the other end. It’s one of the 2^N realities discussed above. Adding the one we simulate in our own reality, there is a 2/2^N chance in total that they observe this conversation happening.
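A small sketch of the encoding, assuming 8 bits per ASCII character sent most significant bit first, one bit per second of sky; `message_to_sky` and `sky_to_message` are hypothetical helpers, not part of any real protocol.

```python
def message_to_sky(message: str) -> list[int]:
    """Encode an ASCII message as one sky bit per second, 8 bits per character."""
    bits = []
    for byte in message.encode("ascii"):
        bits.extend((byte >> shift) & 1 for shift in range(7, -1, -1))  # MSB first
    return bits

def sky_to_message(bits: list[int]) -> str:
    """Decode complete 8-bit groups back into characters (a trailing partial group is ignored)."""
    chars = []
    for i in range(0, len(bits) - len(bits) % 8, 8):
        byte = 0
        for b in bits[i:i + 8]:
            byte = (byte << 1) | b
        chars.append(chr(byte))
    return "".join(chars)

bits = message_to_sky("Hello")
N = len(bits)  # 5 characters -> 40 seconds of sky
print(N, sky_to_message(bits))
# Chance that this exact N-bit sequence is the one a given reality happens to contain
print(f"{1 / 2 ** N:.3e}")
```

Even a five-letter greeting is already a 1-in-2^40 coincidence for an unsimulated reality.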

Is Sentience the Only Constant?

Of course, the sky isn’t the only variable; there would be variations in the entire world around the being as well as in the code running it. From the perspective of these beings, the only constant is their own existence. Let’s see if we can find a way to approximate these variations while leaving the being intact. The goal is to get a more accurate sense of how, from their perspective, probability affects the whole world.

Our simulated intelligence would probably be based on a neural network, or something like it. The network operates on sensory input: visual, auditory, touch, smell, taste, etc. We might code these as a huge collection of numbers, with the visual field being a big array of RGB values, the audio a stream of magnitudes, and so on. They update once every millisecond. If there are M total bits of data across all inputs (with M being a pretty big number), there would be 2^M possible variations our entity could be observing at any given millisecond.

Of course, we program a nice simulation with pleasant living conditions for our being. But what is the probability that they observe it? For each millisecond, there are still 2^M other realities where the same entity observes random nonsense. Over T milliseconds, this expands to 2^(M * T). Unfortunately for such beings, only 2 out of 2^(M * T) will observe the pleasant living conditions we made for them. The rest observe every possible combination of random inputs.
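To get a feel for the scale, here is a sketch that works in base-10 logarithms, since 2^(M * T) is far too large to compute directly for realistic values. The figures M = 1,000,000 bits per millisecond and T = 1,000 ms are purely hypothetical.

```python
import math

LOG10_2 = math.log10(2)

def log10_histories(m_bits: int, t_ms: int) -> float:
    """log10 of 2**(M*T): the number of possible input histories."""
    return m_bits * t_ms * LOG10_2

def log10_pleasant_share(m_bits: int, t_ms: int) -> float:
    """log10 of 2 / 2**(M*T): the share that sees the world we programmed."""
    return LOG10_2 - log10_histories(m_bits, t_ms)

# Hypothetical numbers: 1 megabit of sensory input per millisecond, for one second
M, T = 1_000_000, 1_000
print(f"2^(M*T) has about {log10_histories(M, T):,.0f} decimal digits")
print(f"pleasant share ~ 10^{log10_pleasant_share(M, T):,.0f}")
```

With these made-up numbers, a single second of experience already involves a count of possible histories with about 300 million digits.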

What We Programmed

What the Being Observes?

Could these distressing findings apply to ourselves? After all, our minds may not be as simple as a neural net program, but they still boil down to an algorithm (i.e., the laws of physics and biochemistry) processing inputs (from the rods and cones of our eyes, the hair cells of our ears, the sensory neurons in our gut, etc.). If we are anything like the beings in our thought experiment, there should be a near infinite number of realities that describe our own minds but leave them adrift in worlds of nonsense. We should only experience a coherent existence a tiny percentage of the time. And even the tiny percentage that survive all this should still show enormous variations from moment to moment, with the environment shifting randomly. Why doesn’t this happen?

Given the somewhat terrifying implications, I think it’s something we really ought to be thinking about. But it gets even more complicated.

The Algorithm Itself Appears Randomized

The above examples largely ignore the rules, or “code,” of the algorithm. Each rule would have infinite variations, and there would be infinitely many combinations of rules being added to or removed from the simulation. In those realities where these additions don’t prevent the entity from existing, they would be meddling with everything else in their universe, and not necessarily in a way that we can describe with a nice bell curve.

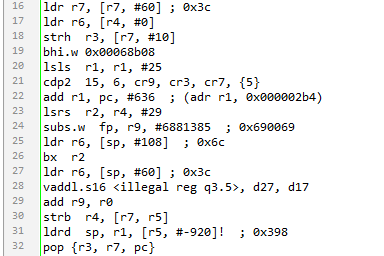

I nevertheless felt it would be instructive to generate some completely random binary instructions and disassemble them, just to get a feel for how they would look. Nothing I generated could be decompiled into sensible C code or the like, but some of the fruits of my labor (in Arm 7 assembly) can be seen below.

Completely Random Arm 7 Assembly

If you’re not a programmer, you may be thinking this gibberish is intelligible to somebody with the proper experience, but it isn’t. It’s nonsense, the coding equivalent of random television static.
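For anyone who wants to try this, here is one possible sketch. It is not necessarily the method used for the listing above (the tool isn't named). It generates random 32-bit words and, if the third-party Capstone disassembler's Python bindings happen to be installed, decodes them in 32-bit ARM mode. Otherwise it just prints the raw words. The helper `random_arm_words` and the load address 0x1000 are arbitrary choices.

```python
import random

def random_arm_words(count: int, seed=None) -> bytes:
    """Return `count` random 32-bit words (4 bytes each) to treat as machine code."""
    rng = random.Random(seed)
    return bytes(rng.getrandbits(8) for _ in range(4 * count))

code = random_arm_words(16, seed=1)

try:
    # Optional: Capstone (pip install capstone) provides the disassembler
    from capstone import Cs, CS_ARCH_ARM, CS_MODE_ARM
    md = Cs(CS_ARCH_ARM, CS_MODE_ARM)
    # disasm() stops at the first word it cannot decode, so output may be short
    for insn in md.disasm(code, 0x1000):
        print(f"0x{insn.address:x}: {insn.mnemonic} {insn.op_str}")
except ImportError:
    # Capstone not installed: show the raw little-endian 32-bit words instead
    for i in range(0, len(code), 4):
        word = int.from_bytes(code[i:i + 4], "little")
        print(f"0x{0x1000 + i:x}: {word:08x}")
```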

We also don’t really know the full extent of the kinds of algorithms that can exist. Does it make a difference if it’s a Lisp program, a C program, a cellular automaton, or something else? In the probability space of all realities, are some or all realities reducible to one another, and if so, does this affect their probability? For instance, under the Church-Turing thesis, any computable algorithm can be implemented on a Turing machine, in lambda calculus, or in any number of other Turing-equivalent models we’ve come up with over the years. So for the purposes of determining how many realities exist, and the probability of experiencing any one of them, do they all count as a single algorithm, with the starting conditions (the symbols on the Turing machine’s “tape”) being the only variable? I don’t know. All I have are suspicions, which I will present to you.

So,

Why Isn’t Our Reality a Random Mess of Nonsense?

Here are some ideas to start.

Perhaps Some Algorithms/Realities are Inherently More Resilient

In the thought experiments above, I simplified things so that everything observable to the simulated beings is encoded in numbers, binary ones at that. You could reduce all of the inputs to one giant number from 0 to 2^N - 1. These realities would be evenly distributed, with only a tiny percentage where something sensible arises. The code for the simulated being and its world also likely has a huge number of possible variations, especially if we consider its machine code, which is itself simply numbers.

But there may be algorithms in which, even considering every possible variation, a simulated entity would observe a consistent reality. If the algorithm treats the starting data as a seed upon which everything else depends, the existence of the simulated beings and of their simulated world could become inextricably connected. To explain what I mean, think of a random number generator on a computer. These only simulate true randomness; for any given seed, the generator always outputs the same sequence of numbers.
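A quick illustration using Python's built-in pseudorandom generator. The toy "world" here is just a list of digits derived entirely from the seed. `generate_world` is a hypothetical name, and the specific values depend on the generator.

```python
import random

def generate_world(seed: int, size: int = 8) -> list[int]:
    """Derive a toy 'world' entirely from a seed (hypothetical illustration)."""
    rng = random.Random(seed)  # Mersenne Twister PRNG: deterministic for a given seed
    return [rng.randint(0, 9) for _ in range(size)]

print(generate_world(42))
print(generate_world(42))  # identical: same seed, same sequence
print(generate_world(43))  # a different seed almost certainly gives a different world
```

Nothing about the second world is "random" once the seed is fixed; change the seed and the whole sequence changes together.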

Elementary cellular automata provide us with a very visual way of thinking about this.

An elementary cellular automaton, starting with a single black cell (Image from Wolfram MathWorld)

The same cellular automaton after many iterations (Image from Wolfram MathWorld)

The algorithm behind elementary cellular automata is simple: you have a one-dimensional array of 1-bit cells. Starting from iteration T = 0, you determine the value of each cell in iteration T + 1 from the values of that cell, the cell to its left, and the cell to its right. Each of these automata has 8 instructions, one for each of the 8 possible states of those 3 cells, specifying whether the cell should get a value of 0 or 1 in the next iteration. The triangle-shaped structure in the image above actually shows this array over time, with the top row representing T = 0, the next row T = 1, and so forth.
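A sketch of this algorithm, using the standard Wolfram numbering in which the 8 instructions are the 8 bits of a rule number from 0 to 255. Rule 30 is used only as an example (the rule in the images isn't stated here), and treating cells past the edges as 0 is an assumption.

```python
def step(cells: list[int], rule: int) -> list[int]:
    """Apply one iteration of an elementary cellular automaton.

    Cells beyond either edge are treated as 0 (an assumption; wrapping around
    is another common choice).
    """
    padded = [0] + cells + [0]
    nxt = []
    for i in range(len(cells)):
        left, center, right = padded[i], padded[i + 1], padded[i + 2]
        pattern = (left << 2) | (center << 1) | right  # which of the 8 cases, 0..7
        nxt.append((rule >> pattern) & 1)             # that case's bit in the rule number
    return nxt

def run(rule: int, width: int, steps: int) -> list[list[int]]:
    cells = [0] * width
    cells[width // 2] = 1  # T = 0: a single black cell in the middle
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history

for row in run(30, 31, 15):  # each printed row is one iteration, top row is T = 0
    print("".join("#" if c else "." for c in row))
```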

Now, this isn’t exactly a universe containing sentient beings, but you can see, visually, how great complexity arises from very simple starting conditions and instructions. If you can imagine our own universe being something like this, with the “Big Bang” as the starting condition at the top, the subsequent expansion of space and formation of stars and galaxies would be analogous to the patterns arising at the bottom.

This might seem similar to my previous example in that it uses a 1-bit array with many variations, but the difference is that if you change the starting conditions at T = 0 and/or the rules even slightly, the result at some large T changes so drastically that any conscious entities either no longer exist or are so fundamentally different that they wouldn’t be aware there was a difference; this is the reality they have always lived with. So it may be that our own universe works similarly: alternate realities with different rules or different starting values don’t evolve life, or at least not you and me. If any observers evolve at all, they are observing a reality with a stable set of rules and data.
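A sketch of that sensitivity: run Rule 30 twice from the same random starting row, except that one cell is flipped, and count how many cells differ at each iteration. Rule 30 is one example choice. Its update is left XOR (center OR right), so any difference is guaranteed to spread at least one cell to the right per step. The width, seed and step count are arbitrary.

```python
import random

def step(cells: list[int], rule: int) -> list[int]:
    """One elementary-CA iteration; cells beyond the edges count as 0."""
    padded = [0] + cells + [0]
    return [(rule >> (padded[i] << 2 | padded[i + 1] << 1 | padded[i + 2])) & 1
            for i in range(len(cells))]

def run(cells: list[int], rule: int, steps: int) -> list[list[int]]:
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history

WIDTH, STEPS, RULE = 101, 50, 30
rng = random.Random(0)
start = [rng.randint(0, 1) for _ in range(WIDTH)]
tweaked = start.copy()
tweaked[WIDTH // 2] ^= 1  # flip a single bit of the starting conditions

a = run(start, RULE, STEPS)
b = run(tweaked, RULE, STEPS)
diffs = [sum(x != y for x, y in zip(ra, rb)) for ra, rb in zip(a, b)]
print(diffs)  # number of differing cells at each iteration
```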

Perhaps Some Realities “Absorb” Anomalous Rules or Data

Still, one can envision that even for emergent, highly interdependent realities, there should be an infinite number of anomalous (not essential to creating or preserving sentience) rules and data that threaten stability, and hence consciousness. For example, in the case of elementary cellular automata, mathematicians always use exactly 8 rules, but in the Omniverse there are infinitely more automata containing anomalous, possibly malignant rules that destroy or prevent sentience. Rules like “at T = 1 billion, set 1 billion cells to white” or “when a certain pattern of cells appears, repeat that pattern for 1000 iterations” are perfectly valid, because every possibility is valid.
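Here is what one such malignant rule could look like when bolted onto an otherwise standard automaton. The anomaly ("at T = 10, set every cell to white") is hypothetical and scaled down from the example above. Under Rule 30 an all-white row stays all-white forever, so the pattern never comes back.

```python
def step(cells: list[int], rule: int) -> list[int]:
    """One elementary-CA iteration; cells beyond the edges count as 0 (white)."""
    padded = [0] + cells + [0]
    return [(rule >> (padded[i] << 2 | padded[i + 1] << 1 | padded[i + 2])) & 1
            for i in range(len(cells))]

def run_with_anomaly(rule: int, width: int, steps: int, anomaly_at: int):
    cells = [0] * width
    cells[width // 2] = 1  # T = 0: single black cell
    history = [cells]
    for t in range(1, steps + 1):
        cells = step(cells, rule)
        if t == anomaly_at:
            # Hypothetical anomalous ninth rule: "at T = anomaly_at, set every cell to white"
            cells = [0] * width
        history.append(cells)
    return history

h = run_with_anomaly(30, 41, 30, anomaly_at=10)
for row in h:
    print("".join("#" if c else "." for c in row))
```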

The same must apply to an emergent reality like ours. What would this even look like? Stars suddenly disappearing or duplicating? Mysterious bursts of energy happening in the middle of nowhere? The probability that our reality consists of a small, essential set of rules without any anomalous outliers should be vanishingly small. It’s more likely that there are as many rules as there are subatomic particles (or whatever it is that constitutes the “data” in our algorithm).

It may be that some realities average out random anomalies faster than they can have a visible impact. There could be some built-in redundancy in the data that patches over anything that changes too much, kind of like a RAID array on an epic scale. Or maybe the data is organized in such a way that the part where sentient beings live is a tiny sliver, with the greatest proportion of it residing in some out-of-the-way, non-essential place that gets disproportionately affected by the anomalies simply by virtue of its bulk.
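A toy version of that kind of built-in redundancy: keep three copies of the data and rebuild it bit by bit by majority vote. This is closer to triple modular redundancy than to RAID proper, but it shows the same idea of anomalies being absorbed. The copy count and the flipped positions are hypothetical. The repair works only while no two copies are corrupted at the same position.

```python
import random

def majority(a: int, b: int, c: int) -> int:
    """Return the bit value held by at least two of the three inputs."""
    return (a & b) | (a & c) | (b & c)

def repair(copies: list[list[int]]) -> list[int]:
    """Rebuild the data position by position by majority vote across three copies."""
    return [majority(a, b, c) for a, b, c in zip(*copies)]

rng = random.Random(1)
data = [rng.randint(0, 1) for _ in range(32)]
copies = [data.copy() for _ in range(3)]

# Hypothetical anomalies: flip bits, never at the same position in two copies
for copy_index, position in [(0, 3), (1, 17), (2, 29)]:
    copies[copy_index][position] ^= 1

print(repair(copies) == data)  # the anomalies are "absorbed"
```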

Perhaps Anomalous Instructions are Often Fatal

This is similar to the emergent reality example above, where anomalies prevent sentience from appearing or evolving in the first place. It could be that sentience exists in a reality, but that anomalies tend to be so drastic as to always kill that sentience, leaving only realities where the anomaly didn’t happen. The realities that “die” are unaware and the ones that survive feel safe and secure.

I mentioned above the possibility of large-scale changes like stars suddenly disappearing. Could it be that, somehow, our existence is inextricably dependent on these faraway entities (their gravitational pull, however faint at such distances, the photons they emit that reach us, or something else) and that without them, we, too, would cease to exist?

There may also be innate ways an algorithm can shut down or halt if it is modified beyond its essential instructions, much as DRM software tries to exit when someone runs a pirated copy. So the realities that suddenly feature large segments of the universe being modified enter a halting state and their observers cease to exist, leaving only those in realities with benign rules.
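A loose software analogy, in the spirit of a DRM tamper check: fingerprint the essential rules with a hash, and halt if the rules in force don't match. The rule table, the fingerprint and `run_universe` are all hypothetical. A real universe wouldn't be doing SHA-256, but the logic of "modified means halted" is the same.

```python
import hashlib

RULES = bytes([30])  # hypothetical "essential instructions": a Rule 30 table
EXPECTED = hashlib.sha256(RULES).hexdigest()  # fingerprint of the unmodified rules

def run_universe(rules: bytes, steps: int = 5):
    """Halt immediately if the rules differ from the essential ones."""
    if hashlib.sha256(rules).hexdigest() != EXPECTED:
        return None  # halting state: no observers in this reality
    return f"ran {steps} steps with rule {rules[0]}"

print(run_universe(RULES))
print(run_universe(bytes([30, 99])))  # an anomalous extra rule appended -> halts
```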

In either case, only realities where anomalies are benign or didn’t happen at all would have surviving observers. But there could be a sliding scale, where some anomalies are small enough to be observed and benign enough not to be harmful. It’s conceivable that these are hidden in our own reality, manifesting as entropy, background noise, sampling errors, or other unexplained phenomena.

Our Survivorship has Warped Our Understanding of Physics

Imagine that we may be misunderstanding our entire system of physics such that 99% of the time, it wouldn’t even support life. Perhaps it’s as simple as a universal constant (the speed of light, the gravitational constant, the Planck constant) that isn’t constant at all, but continuously varies, either randomly or according to some pattern, except that if it takes any value other than this one particular value, we all die. So not only is our very existence extremely improbable, but our observations of the nature of physics are colored in ways we can’t even perceive. We effectively only get to see a tiny percentage of what actually happens in our universe, because in the remainder, we die.
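A small Monte Carlo sketch of this survivorship effect. The "constant" is drawn uniformly from 0 to 2 in each reality, and observers exist only when it lands within a narrow tolerance of 1.0. The range, tolerance and sample count are invented for illustration.

```python
import random

def surviving_measurements(n_realities: int, tolerance: float, seed: int = 0) -> list[float]:
    """Values of a varying 'constant' as measured by the observers who survive it."""
    rng = random.Random(seed)
    survivors = []
    for _ in range(n_realities):
        constant = rng.uniform(0.0, 2.0)      # the "constant" actually varies
        if abs(constant - 1.0) <= tolerance:  # observers exist only near 1.0
            survivors.append(constant)
    return survivors

seen = surviving_measurements(100_000, tolerance=0.01)
print(len(seen), min(seen), max(seen))
```

About 1% of the realities have observers, and every one of them measures a value within 0.01 of 1.0, even though the underlying quantity ranges over a span 200 times wider.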

Simulated Realities are Inherently More Probable

Simulated beings, whether they know it or not, are also part of any parent simulator’s reality; in the case of our entity observing the white or black sky, the algorithm changes to one of subatomic particles and physical forces altering the voltages in a computer, with the same end result that they observe a white or black sky.

Going back to the thought experiment where we created a pleasant simulated world for our sentient being (the one with 2^M sensory input combinations updating every millisecond), we found that there is only a 2 out of 2^(M * T) chance of it observing that reality: the one in the base omniverse (the orphan) and the one run by us. We could improve this chance by roughly 1/2^(M * T) each time we run the simulation. If we run it 2^(M * T) times, just over 50% of these simulated beings would observe the pleasant reality, and the rest something random. Running it enough times should get it much closer to 100%, because most of them are in our reality. Our poor sentient being is saved (usually).
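The arithmetic can be checked exactly with fractions, assuming 2^(M * T) orphan realities (exactly one of them pleasant) plus one extra pleasant instance per simulation run. Under that accounting a single run gives 2/(2^(M * T) + 1), essentially the 2/2^(M * T) above. A tiny stand-in value replaces M * T so the numbers stay readable.

```python
from fractions import Fraction

def pleasant_share(n_bits: int, runs: int) -> Fraction:
    """Share of instances of the being that see the pleasant world.

    n_bits stands in for M * T. Orphan realities: 2**n_bits, exactly one of
    them pleasant. Each simulation run we perform adds one more pleasant instance.
    """
    orphans = 2 ** n_bits
    return Fraction(1 + runs, orphans + runs)

n = 10  # tiny stand-in for M * T
print(pleasant_share(n, 1))                      # one run
print(pleasant_share(n, 2 ** n))                 # 2^n runs: just over one half
print(float(pleasant_share(n, 1000 * 2 ** n)))   # many more runs: close to 1
```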

Just as with our simulated example, there is also the chance that this reality appears stable only because it is being fostered within some larger, more stable reality, by intelligences that decide not to include malignant rules or data. This may sound a bit like the “it was aliens all along” cliché, but remember, all possible realities exist. One thing I’ve learned over the years is that if I ever believe I’ve thought of something truly novel, I’m probably wrong; someone else among the billions of people on Earth will have come up with it first. So it’s a certainty that this is occurring to some other intelligence somewhere, if not one simulating our own reality.

Now, this doesn’t necessarily mean that our reality is running on an Intel-based server farm or the like. It is probable that the parent reality wouldn’t have the same limitations as our own. It might not even have atoms, matter, energy, entropy, or physical forces as we think of them. It could have features more suited to computing the physical laws of its simulated worlds in a stable, consistent way over billions of years, at little cost or trouble to the beings managing it all.

Also worth noting: just as a stable reality could increase the probabilities of stable child realities by fostering them in a simulation, an unstable reality could make things worse, increasing the chance that its inhabitants mess things up for their foster children. So it behooves any such intelligences to check that their own reality isn’t doomed to end on a sad note before condemning any child realities to the same fate.

Conclusion

I’ve tried here to present a number of different reasons why we might be observing this reality and not some totally random field of static. Admittedly, I’m a video game developer and not a scientist, but it would please me to think that I could inspire someone who is to investigate things further.

All of science has been attempting to determine the essential rules of our reality since the beginning of human history, and we’re getting closer all the time. But I don’t think the kinds of anomalies I discussed here are something very many people have thought about or are looking for; generally they assume our universe is perfect, and it probably isn’t. I think it would be useful for those with the resources to start looking for verifiable anomalies in our universe’s algorithm or data. We could then begin to estimate how many hidden anomalies there are, much in the same way we can estimate hidden bug counts in software. At the very least this could potentially help us avoid existential disasters we never knew existed before. It might also help us to better determine the nature of our reality’s essential rules.
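One common way software teams estimate hidden bug counts is capture-recapture (the Lincoln-Petersen estimator): two independent searches, and the size of their overlap tells you roughly how much neither found. A sketch follows, with entirely hypothetical numbers. It assumes the two searches are independent and every anomaly is equally likely to be found, which real anomaly hunts may not satisfy.

```python
def lincoln_petersen(found_by_a: int, found_by_b: int, found_by_both: int) -> float:
    """Estimate the total number of defects from two independent searches."""
    if found_by_both == 0:
        raise ValueError("no overlap between searches; the estimate is undefined")
    return found_by_a * found_by_b / found_by_both

# Hypothetical numbers: search A finds 20 anomalies, search B finds 30, 10 are shared
total = lincoln_petersen(20, 30, 10)
unique_found = 20 + 30 - 10
print(total, total - unique_found)  # estimated total, and estimated still hidden
```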

This concludes all I have to say on this topic, which is basically a setup for the next one. Hopefully I can get into what to me are the more exciting, science-fiction-like aspects of things. But I’m a busy guy, so please be patient; it’ll be a while.